All information transmitted over the Internet, between routers, servers and other hosts, is split into smaller chunks of data known as packets. Every packet consists of a header and content (the payload). To use an analogy, think of a packet as a traditional paper envelope: the letter inside is the content, and the stamps and addresses written on the outside are the header. Without an address on the envelope, the letter will never reach its intended destination. Much like a post office, the ISP’s router examines the destination address of each packet and decides where to send it next. Those “addresses written on the envelope”, the headers, are one kind of metadata: data about data.
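
A minimal sketch of the envelope analogy, assuming an IPv4 packet with a plain 20-byte header and no options. The helpers `build_ipv4_packet`, `ipv4_checksum` and `destination_of` are hypothetical names written for illustration. The source address 192.168.1.10 is an assumed private address, and 40 zero bytes stand in for the letter inside. The point is that the destination sits at a fixed place in the header (bytes 16 to 19), so a router can read it without opening the payload.

```python
import socket
import struct

# Hypothetical helpers for illustration; addresses and payload are made up.


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words (the IPv4 header checksum)."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_ipv4_packet(src: str, dst: str, payload: bytes, ttl: int = 64, proto: int = 6) -> bytes:
    """Wrap the payload (the letter) in a minimal 20-byte IPv4 header (the envelope)."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,           # version 4, header length 5 x 32-bit words
        0,                      # type of service
        20 + len(payload),      # total length: header + payload
        0, 0,                   # identification, flags/fragment offset
        ttl, proto,             # time to live, protocol (6 = TCP)
        0,                      # checksum placeholder, filled in below
        socket.inet_aton(src),  # source address
        socket.inet_aton(dst),  # destination address
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:] + payload


def destination_of(packet: bytes) -> str:
    """What a router reads: bytes 16-19 of the header, never the payload."""
    return socket.inet_ntoa(packet[16:20])


packet = build_ipv4_packet("192.168.1.10", "173.252.120.6", bytes(40))
print(len(packet), destination_of(packet))
```

Run as-is, it prints `60 173.252.120.6`: a 20-byte header plus 40 bytes of content gives a 60-byte packet, the same weight as the packet in the story, and the router-side function never looks past the header.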

On a sunny morning at 7:45:03, an Internet packet is born. He weighs 60 bytes and has just one simple mission in life: to get to a place called 173.252.120.6. Even though this does not sound like an exciting mission, what happens over the next second is pretty exciting. His journey starts with a quick 7 ms jump to a box 5 meters away called the home router. Via the attic, where he passes through the switch in which all the building’s cables meet, he jumps down to the street and into the underground cable that brings him to the main city router in Novi Sad. At a speed of 30,600,000 km/h he runs for 10 ms to Belgrade, to the SBB TelePark building.
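
The figures in this paragraph can be checked against each other. A minimal sketch, assuming the 10 ms and 30,600,000 km/h figures describe one uninterrupted run at constant speed: distance is speed multiplied by time, once hours are converted to milliseconds (1 h = 3,600,000 ms). The function name `distance_km` is hypothetical.

```python
# Hypothetical helper; the inputs are the figures quoted in the paragraph above.


def distance_km(speed_km_per_h: float, time_ms: float) -> float:
    """Distance covered at a constant speed during time_ms milliseconds."""
    ms_per_hour = 3_600_000
    return speed_km_per_h * time_ms / ms_per_hour


# Novi Sad -> Belgrade hop from the story: 30,600,000 km/h for 10 ms.
print(distance_km(30_600_000, 10))  # 85.0
```

The result, 85 km, is the cable length those two figures imply for the hop; put differently, the packet moves at 8,500 km every second.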

89.216.8.141 SBB TelePark, Belgrade, RS (Photo: Google StreetView)

He jumps between a few routers inside the building and then leaves the country, traveling for 0.05 s through the tunnel toward Frankfurt, Germany. Frankfurt is a very popular destination these days for young Internet packets born in Serbia. Almost 50% of them, at some point in their very short lives, pass through DE-CIX, the biggest Internet Exchange Point (IXP) in the world[1], with an average of 2,523 gigabits of traffic per second[2]. This is where more than 600 ISPs from more than 60 countries meet and connect, a kind of airport for the Internet.

On his long-distance journey our Internet packet will jump from one “crossroad” of the Internet to another, crossing countries and invisible borders and visiting big, gray, dehumanized buildings in city suburbs. The European IXP scene today consists of some 150 IXPs and spans an impressive range of players, from the largest IXPs worldwide[3] to up-and-coming IXPs and critical regional players[4], all the way down to the small local IXPs found all across Europe[5].

80.81.194.40 – Equinix, Lärchenstr. 110, 65933 Frankfurt – DE-CIX premium enabled site (Photo: Google StreetView)

80.81.194.40 – Equinix, I.T.E.N.O.S., KPN, Level3, Telehouse, Kleyerstrasse 79-90, Frankfurt – DE-CIX (Photo: Google StreetView)

After visiting the biggest Internet exchange point in the world, our packet is off to Dublin, Ireland, passing through the TelecityGroup carrier-neutral data center, which specializes in bandwidth-intensive applications and in content and information hosting.

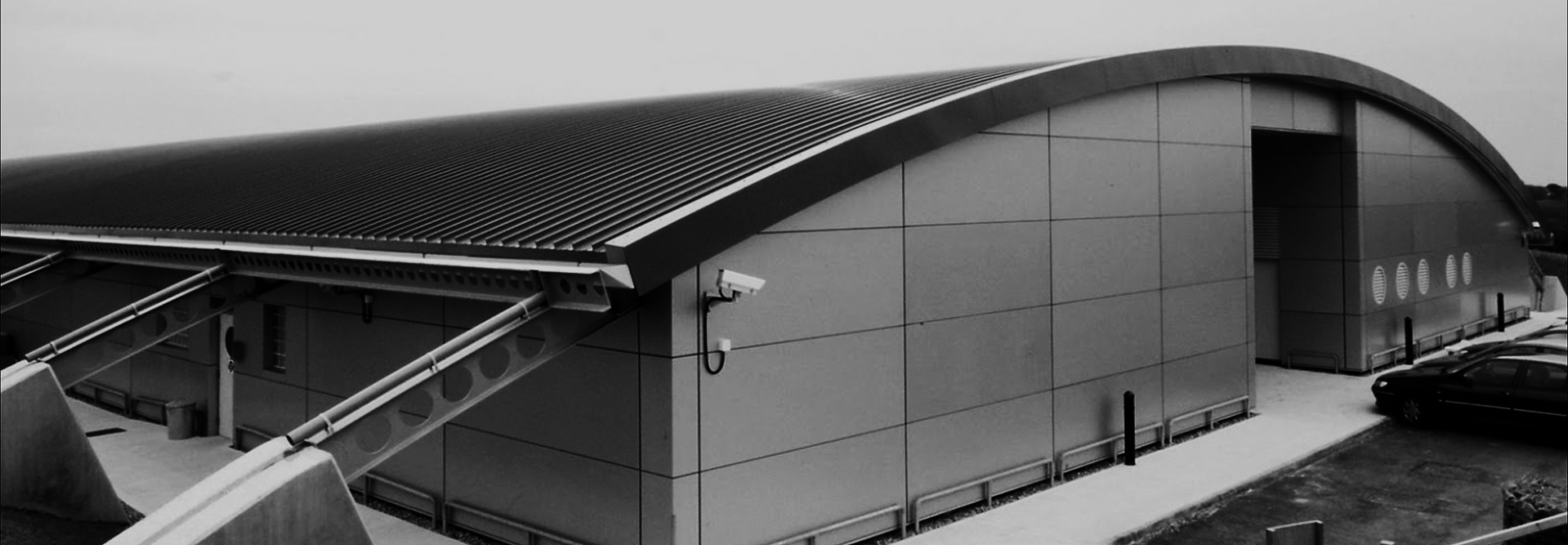

31.13.30.211 TelecityGroup, Dublin, IE (Photo: Google StreetView)

Some stops on our Internet packet’s path are hidden from us: numerous repeaters, network devices and intermediate routers along the way do not reveal their existence in our traceroute results. Most of this invisible equipment is there to make the journey possible, regenerating the signal or simply connecting two cables, but some of it is hidden from us for other reasons. In the 1970s, Skewjack farm in west Cornwall, England, on the Atlantic coast, was a cult place for surfing enthusiasts: the Skewjack Surf Village. The surf village closed in 1986, and the place became known for another kind of surfing, web surfing, or more precisely an extended form of web-surfing voyeurism and hoarding. The farm lies in the same county as a place that is really important for the Internet: Widemouth Bay, south of Bude, the landing spot for some of the biggest and busiest transatlantic optic cables connecting Europe and the US, one of the backbones of today’s Internet. Before the Internet packet dives deep beneath the ocean, he will most likely jump to the bunker-like building at the Skewjack farm.
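
Traceroute finds the stops on a path by sending probes with a growing time-to-live (TTL). Every router decrements the TTL, and the router that brings it to zero normally answers with an ICMP Time Exceeded message, revealing its address. A router configured not to answer shows up only as `*`, while repeaters and plain cable joints never touch the TTL and never appear at all. The sketch below simulates this offline. The path, its order and which hops stay silent are hypothetical, loosely built from addresses mentioned in this text; 192.168.1.1 is an assumed home-router address, and `probe` and `traceroute` are illustrative names, not a real tool’s API.

```python
from typing import List, Optional

# Hypothetical path; None marks a router that forwards packets but never answers probes.
PATH: List[Optional[str]] = [
    "192.168.1.1",    # home router (assumed address)
    "89.216.8.141",   # SBB TelePark, Belgrade
    None,             # silent intermediate router
    "80.81.194.40",   # DE-CIX, Frankfurt
    "31.13.30.211",   # TelecityGroup, Dublin
    None,             # another silent hop
    "31.13.29.232",   # Facebook data center, Forest City
    "173.252.120.6",  # destination
]


def probe(path: List[Optional[str]], ttl: int) -> Optional[str]:
    """Return the address that answers a probe sent with this TTL (None = no answer)."""
    for position, router in enumerate(path, start=1):
        if position == len(path):
            return router  # the destination itself replies to the probe
        ttl -= 1           # each router decrements the TTL before forwarding
        if ttl == 0:
            return router  # ICMP Time Exceeded, unless the router stays silent
    return None


def traceroute(path: List[Optional[str]], destination: str, max_hops: int = 30) -> List[str]:
    """Send probes with TTL 1, 2, 3, ... until the destination answers."""
    lines = []
    for ttl in range(1, max_hops + 1):
        answer = probe(path, ttl)
        lines.append(f"{ttl:2d}  {answer if answer is not None else '*'}")
        if answer == destination:
            break
    return lines


for line in traceroute(PATH, "173.252.120.6"):
    print(line)
```

Hops 3 and 6 come back as `*`: those routers exist and forward the packet, yet the output hides them. Real traceroute implementations also send several probes per TTL and time each one; this sketch leaves that out.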

Skewjack, UK (Photo: Google Maps)

In 2014 it was revealed that this farm hosts a Government Communications Headquarters (GCHQ) interception point that copies data to GCHQ Bude, an even more visually striking farm, populated with dozens of huge satellite dishes that serve as a satellite ground station and eavesdropping centre. By one estimate, 25% of all Internet traffic travels through Cornwall[6].

GCHQ Bude, England (Photo: Google Maps)

After a quick detour, our packet enters a transatlantic cable landing site 10 km away at Widemouth Bay, near the small coastal town of Bude, which has one of the biggest concentrations of transatlantic optic cable landing sites in the world.

Before 1866, information traveled from one side of the Atlantic to the other only by ship, which sometimes took weeks. The first attempt, in 1858, to lay a 2,000-mile copper cable along the ocean floor succeeded, but the cable worked for only three weeks before it was destroyed after many technical difficulties[7]. It took nine years and five attempts to build a lasting transatlantic telegraph cable, “The Eighth World Wonder”, a technology that would rapidly transform communication between continents and create the first worldwide communication network.

Cable Station, Valentia Island Ireland (Photo: Google StreetView)

The 1866 transatlantic telegraph cable, laid between Valentia Island in Ireland and Heart’s Content in Newfoundland, could transfer 8 words a minute, and initially cost $100[8] to send 10 words[9]. In 1900, the topology of the telegraph network[10] looked very similar to that of the submarine optic cable network we have today[11]. The main landing points of this network, made of thousands of kilometers of optic cables, are shaped by geographical conditions as well as by political and economic power: the power to access, transfer and store information, and to participate in the data and metadata exploitation industry and the surveillance-industrial complex.

It’s hard not to be seduced by the magic of those tiny streams of data traveling at the speed of light along the ocean floor. Different data streams are carried on different frequencies of light, allowing enormous amounts of data to be transferred at once; in the case of our packet, at an average of 50,000,000 m/s[12]. Over the past 150 years, the speed of transatlantic communication has jumped from weeks to fractions of a second, far beyond human perception, making the process of information transfer abstract and invisible. Still, for high-frequency trading algorithms, responsible for about half of all stock trades in the European Union and the United States, every millisecond lost in data transfer matters, pushing for ever faster and more sophisticated data-transfer solutions.
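
The 50,000,000 m/s figure comes from footnote 12: 5,000 km covered in around 100 ms. A minimal sketch of that unit conversion follows; the function name `average_speed` is hypothetical. For comparison, light in optical fibre travels at roughly 200,000 km/s (about two thirds of its speed in a vacuum), so the figure is best read as an effective average over the whole hop rather than the speed of the light itself.

```python
from typing import Tuple

# Hypothetical helper; inputs are the distance and time from footnote 12.


def average_speed(distance_km: float, time_ms: float) -> Tuple[float, float]:
    """Return the average speed as (metres per second, kilometres per hour)."""
    # km -> m multiplies by 1,000 and ms -> s divides by 1,000, hence 1,000,000 / time_ms.
    metres_per_second = distance_km * 1_000_000 / time_ms
    km_per_hour = metres_per_second * 3_600 / 1_000  # 3,600 s per hour, 1,000 m per km
    return metres_per_second, km_per_hour


m_s, km_h = average_speed(5_000, 100)
print(f"{m_s:,.0f} m/s, {km_h:,.0f} km/h")  # 50,000,000 m/s, 180,000,000 km/h
```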

Tuckerton, NJ, TAT-14 landing point (Photo: Google Maps)

On the other side of the ocean there are a few main cable landing spots. They are mostly situated on the east side of Long Island (Brookhaven) and in Manasquan and Tuckerton, New Jersey, an hour-and-a-half drive south of New York City. Our Internet packet now heads south, toward another Internet capital: Ashburn, Virginia, 50 km northwest of Washington, D.C.

At first, the Internet backbone was maintained by the US government: it was run by the National Science Foundation (NSF) and used by academic and educational communities and institutions. The NSF supercomputing initiative, launched in 1984, was designed to make high-performance computers accessible to researchers across the US[13], and by 1986 its 56 kbit/s backbone was connecting scientific centers across the country. But the NSFNET Acceptable Use Policy barred the growing number of commercial ISPs from using this backbone[14]. In the early 1990s, commercial ISPs needed a way to physically connect to one another so they could exchange traffic over their private infrastructure, avoiding the government-owned backbone. They came up with common, neutral physical locations where they would connect their networks, a kind of roundabout on the information highway. One of the first such locations was in Northern Virginia, in the suburbs of Washington, D.C., home to numerous technology startups and military and government contractors. MAE-East (Metropolitan Area Exchange), created in 1992, quickly became one of the biggest crossroads in Internet history: at some point most of the world’s Internet traffic passed through it, creating a sort of Internet black hole. The 5th floor of a building in Tysons Corner became a bottleneck of the Internet.

The opening of the network access points also marked an important philosophical shift, one that would have ramifications for its physical structure. In a clear departure from its original roots, the Internet was no longer structured as a mesh, but rather entirely depended on a handful of centers[15].

Even though the area is no longer as influential as it was in the early 1990s, Ashburn is still one of the Internet’s capitals: home to a large number of data centers, a strategic communications hub for the eastern United States, a major communications gateway to Europe and the largest Internet peering point in North America.

Equinix, 44470 Chilum Place, Ashburn, VA

After visiting the former Internet capital, our Internet packet heads 700 km southwest to his final destination: Forest City, North Carolina. Forest City: home to 7,500 residents and hundreds of millions of user profiles. The physical manifestation of Facebook. The world’s biggest database of personal information, private and public photos, intimate chats, thoughts and emotions, packed into two massive 28,000-square-meter facilities filled with hard drives, routers, wires and cooling systems.

31.13.29.232 Facebook Data Center, Forest City, North Carolina, US (Photo: Google StreetView)

Only 80 full-time employees working three shifts are needed to run these gigantic gray buildings. Thanks to automation systems[16], one technician can look after about 25,000 servers, which work in complete darkness, with lights turning on only when sensors detect movement. Not far away are other big facilities built for the same purpose and of similar size, operated by Google (in Lenoir) and Apple (in Maiden).

Google data center, Lenoir, North Carolina, US (Photo: Google StreetView)

These are the places where your data actually exists. Data centers are monopolies of collective data, accumulations of information about information[17]. They are where the metadata society accumulates its wealth, made up of vast amounts of information created by us and analyzed by them.

This is the end point of the exciting one-second life and journey of our Internet packet. In just one second, he traveled over 9,000 km and crossed numerous borders, passing from one ISP to another, each operating under different legal frameworks and commercial interests, jumping from one Internet crossroad to the next and leaving a trace of his existence at every point along his path. The life mission of this packet was simple: he was created to tell facebook.com that a user somewhere in Serbia had typed www.facebook.com into his browser. Once at his fated destination, he will trigger the birth of a number of new packets and send them on a journey in the opposite direction, filled with information, from the Facebook data center to the user’s computer, so that a Facebook page appears on the user’s screen in the blink of an eye.

Ghosts and the Afterlife of Data

At his final destination our packet will be stored, laid to rest in a dark, cold room of the data center among billions of other packets, waiting for an eventual afterlife as the subject of algorithmic analysis. But this is not the only place where he will be stored. At numerous points on his journey he was cloned and stored in other data centers and on ISPs’ data retention servers, in different countries, by different government agencies and commercial companies. He will eventually be used in different ways: as a piece of the big puzzle representing your behavior, preferences and interests, or as a small piece that will distinguish you from, or mark you as, a potential terrorist in the eyes of the algorithm. Meanwhile, our little Internet packet will contribute to the fast-growing industry of personal data collection, analysis and trade. The value of EU citizens’ data was estimated at €315bn in 2011, with the potential to grow to nearly €1tn annually by 2020[18].

1. http://en.wikipedia.org/wiki/List_of_Internet_exchange_points_by_size

2. http://www.de-cix.net/

3. e.g. AMS-IX, DE-CIX, LINX

4. e.g. Netnod, UA-IX, ESPANIX, PLIX, France-IX, ECIX, VIX, SOX

5. “On the importance of Internet eXchange Points for today’s Internet ecosystem”, http://cryptome.org/2013/07/ixp-importance.pdf

6. GCHQ Bude was featured extensively in the BBC2 Horizon television programme “Inside the Dark Web” (September 11, 2014). The programme estimated that 25% of all Internet traffic travels through Cornwall, England.

7. The cable failed when Wildman Whitehouse applied massive shocks of 2,000 volts in an attempt to achieve faster operation, probably one of the first and biggest “overclocking” failures in history.

8. That was 10 weeks’ salary for a skilled workman of the day. After inflation, $100 translates to about $1,340 today.

9. http://royal.pingdom.com/2008/03/05/transatlantic-cable-handled-8-words-a-minute-in-1866/

10. https://raoulpop.files.wordpress.com/2009/02/1901-telegraph-cable-map1.jpg

11. http://www.submarinecablemap.com/

12. 5,000 km in around 100 ms, i.e. 50,000,000 m/s or 180,000,000 km/h

13. https://www.nsf.gov/about/history/nsf0050/internet/launch.htm

14. http://www.cybertelecom.org/notes/nsfnet.htm#aup

15. Andrew Blum, “Tubes: A Journey to the Center of the Internet”

16. http://www.enterprisetech.com/2014/04/22/open-compute-full-bloom-facebook-north-carolina-datacenter/

17. Matteo Pasquinelli, “Anomaly Detection: The Mathematization of the Abnormal in the Metadata Society”

18. Boston Consulting Group, “The Value of Our Digital Identity”, November 2012